Perceived Course Rigor in Sport Management: Class Level, Course Grades, and Student Ratings

By James E. Johnson, Robert M. Turick, Michael F. Dalgety, Khirey B. Walker, Eric L. Klosterman, and Anya T. Eicher. All authors are based at Ball State University in Muncie, Indiana.

During the last half-century, critics of higher education have disparaged institutions for their declining standards and lack of rigor. The U.S. has slipped in educational rankings while popular culture has glorified the social aspects of college above the intellectual (Arum & Roksa, 2011). Caught in the middle, particularly as higher education has adopted more business-centric models, are faculty.

While many faculty and administrators strive for high standards, worry over receiving poor student ratings may lead some faculty to lower their expectations and standards. For example, the grading leniency hypothesis (Marsh & Dunkin, 1992) suggests that faculty will inflate grades out of fear of retributional bias (Feldman, 2007) in student ratings. This pressure may be amplified when tenure and promotion are at stake and student perceptions are the primary basis for evaluating teaching performance. In sport management, where some programs must combat the “easy major” label, the issue becomes even more complex. These beliefs contrast with validity theory (Marsh & Dunkin, 1992), which suggests that students value a rigorous academic experience and rate faculty accordingly.

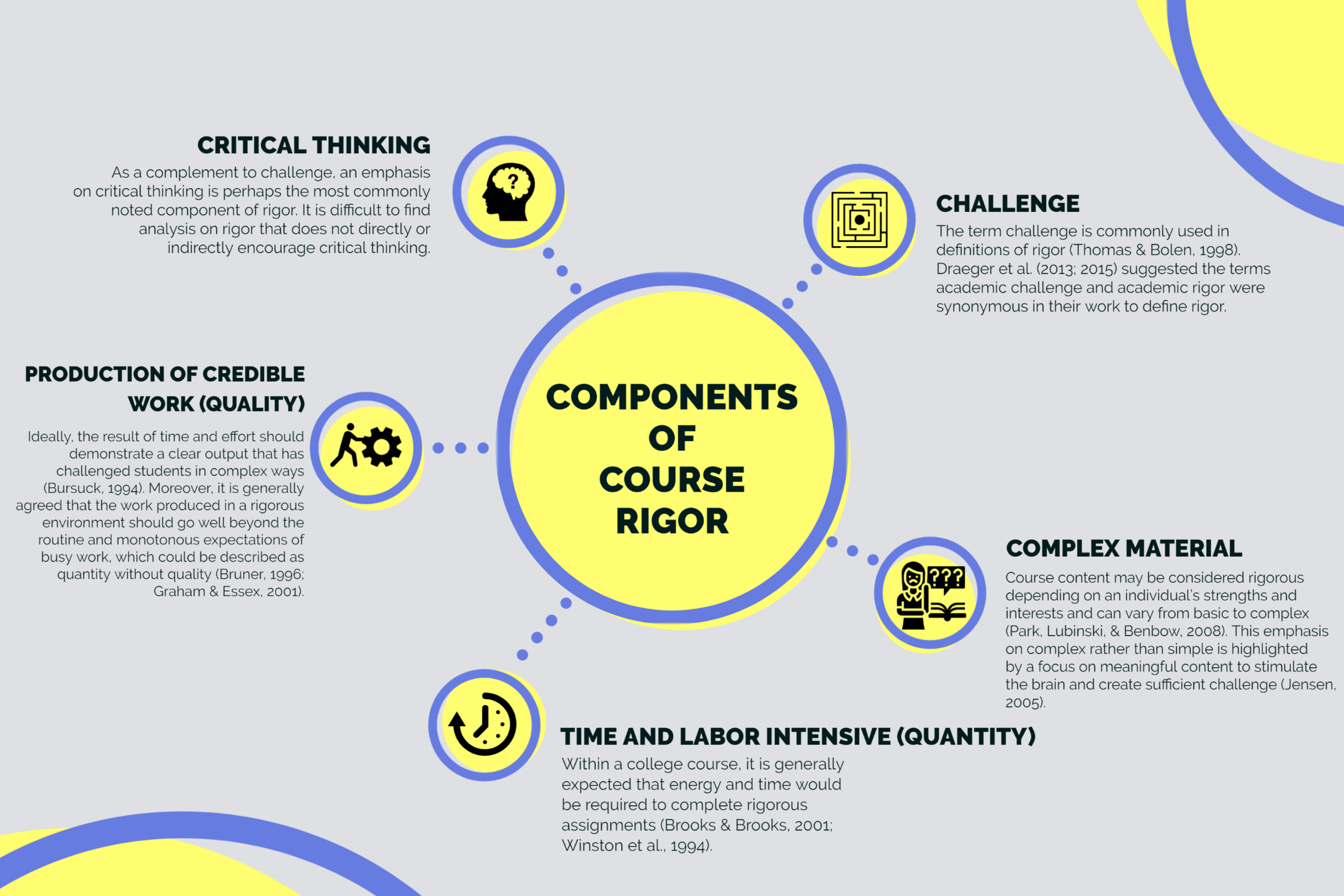

Unfortunately, research evaluating rigor and its potential impact on course and faculty ratings is scarce. For this study, we examined rigor within individual courses so that instructor and course could be evaluated simultaneously. Predictably, an operational definition of course rigor is elusive: stripping away the term's subjectivity to define and evaluate the construct objectively is challenging. Fortunately, Johnson et al. (2019) provided a definition comprising the following five components of course rigor:

1. Critical Thinking
2. Challenge
3. Complex Material
4. Time and Labor Intensive (Quantity)
5. Production of Credible Work (Quality)

From those five components, Johnson et al. created seven questions designed to be administered alongside student rating questionnaires. Their work provided the template for our study of sport management courses.

Our study collected responses from 830 students in 69 sport management courses over four years to determine whether course ratings (i.e., student evaluations), course grades, and course level were related to perceived course rigor. The seven rigor questions developed by Johnson et al. were added to the existing student rating forms and received strong support in a factor analysis. The ratings forms were distributed at the end of each semester and included three groups of questions assessing the instructor, the course, and perceived rigor.

Course rigor correlated most strongly with course and instructor ratings. When predicting course rigor, only overall rating scores (combined instructor and course scores) and course GPA were significant predictors. As overall ratings increased, so did perceived rigor; as course GPA decreased, perceived rigor increased.
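The direction of these relationships can be summarized as a simple course-level linear model. This is a sketch under stated assumptions, not the authors' reported model: it assumes an ordinary least-squares regression with each course $j$ as the unit of analysis, and the symbols $\text{Rigor}_j$, $\text{Overall}_j$, and $\text{GPA}_j$ are hypothetical labels for mean perceived rigor, mean overall rating, and mean course GPA. Other predictors examined in the study, such as class level, presumably did not reach significance and are omitted here. No coefficient values are implied; only the signs follow from the findings.

```latex
\text{Rigor}_j = \beta_0 + \beta_1\,\text{Overall}_j + \beta_2\,\text{GPA}_j + \varepsilon_j,
\qquad \beta_1 > 0, \quad \beta_2 < 0
```

Read this way, $\beta_1 > 0$ means that, holding course GPA fixed, courses with higher overall ratings tend to be perceived as more rigorous, and $\beta_2 < 0$ means that, holding ratings fixed, courses with lower mean GPAs tend to be perceived as more rigorous.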

The pragmatic implications of this work are noteworthy for faculty and administrators.

Although rigorous coursework may result in lower mean course GPAs, students appear to appreciate course rigor: greater perceived rigor was associated with higher student ratings, provided the work was appropriate for the class level and content area. This finding supports validity theory (Marsh & Dunkin, 1992).

Because sport management students reported higher instructor, course, and overall ratings when perceived rigor increased (and mean course grades decreased), the grading leniency hypothesis (Marsh & Dunkin, 1992) does not seem to apply.

Our results suggest that faculty fear of retributional bias (Feldman, 2007) can be reduced. This conclusion does not mean that individual students will never give negative ratings or act retributively on occasion. Rather, course-level mean scores over time indicate that faculty who design and implement courses incorporating the five components of rigor are likely to see their mean course ratings improve.

The full research article appears in Sport Management Education Journal, Vol. 14, Issue 1.

References

Arum, R., & Roksa, J. (2011). Academically adrift: Limited learning on college campuses. Chicago, IL: University of Chicago Press.

Feldman, K. A. (2007). Identifying exemplary teachers and teaching: Evidence from student ratings. In R. P. Perry & J. C. Smart (Eds.), The scholarship of teaching and learning in higher education: An evidence-based perspective (pp. 93–129). Dordrecht, The Netherlands: Springer.

Johnson, J. E., Weidner, T. G., Jones, J. A., & Manwell, A. K. (2019). Evaluating academic course rigor, Part I: Defining a nebulous construct. Journal of Assessment and Institutional Effectiveness, 8(1–2), 86–121.

Marsh, H. W., & Dunkin, M. J. (1992). Students’ evaluations of university teaching: A multidimensional perspective. In J. C. Smart (Ed.), Higher education: Handbook of theory and research (Vol. 8, pp. 143–234). New York, NY: Agathon Press.